What tracing solves today — and why the model breaks for agentic AI

Distributed systems are the backbone of most modern products: microservices, serverless functions, queues, caches, APIs, edge nodes, and cloud integrations. As systems grew more fragmented, the industry needed a way to understand how a single request moves across dozens or hundreds of components.

That is why distributed tracing became essential.

1. What Tracing Means for Distributed Systems Today

In today’s systems, tracing answers one timeless question:

Where did the time go?

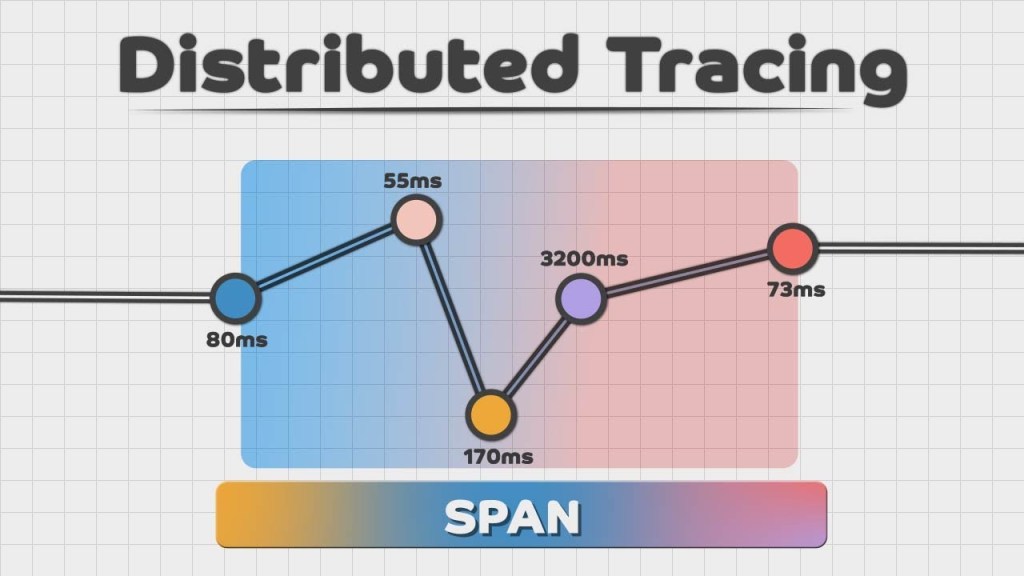

A trace represents the full path of a request as it travels through multiple components. Each hop is recorded as a span, showing:

- what operation ran

- where it ran

- how long it took

- which downstream calls it triggered

- where failures or retries occurred

A simplified example:

    User Request
         |
    [ Gateway ]    12ms
         |
    [ Service A ]  41ms
         |  \
         |   \
         |   [ Cache ]  5ms
         |
    [ Service B ]  118ms  ← bottleneck

This structure gives engineers the ability to:

- pinpoint bottlenecks

- visualize dependencies

- understand failure propagation

- debug cascading outages

- correlate logs and metrics around a timeline

In distributed systems, tracing is the narrative that explains system behavior.
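To make the span structure concrete, here is a minimal sketch of the simplified example above as a tree of spans, with a helper that finds the slowest hop. The `Span` class, the `slowest_span` helper, and the operation names are assumptions made for illustration, not the data model of any particular tracing library. The durations from the diagram are treated as per-hop times.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Span:
    """One hop in a trace: what ran, where it ran, and for how long."""
    operation: str          # hypothetical operation name
    service: str            # where the operation ran
    duration_ms: float      # how long it took
    children: List["Span"] = field(default_factory=list)  # downstream calls

    def walk(self) -> Iterator["Span"]:
        """Yield this span and every descendant, depth first."""
        yield self
        for child in self.children:
            yield from child.walk()


def slowest_span(root: Span) -> Span:
    """Return the span with the largest recorded duration."""
    return max(root.walk(), key=lambda s: s.duration_ms)


# Services and durations come from the diagram; operation names are made up.
trace = Span("handle request", "Gateway", 12, [
    Span("process", "Service A", 41, [
        Span("lookup", "Cache", 5),
        Span("query", "Service B", 118),
    ]),
])

bottleneck = slowest_span(trace)
print(f"bottleneck: {bottleneck.service} ({bottleneck.duration_ms}ms)")
```

Real tracing backends record start and end timestamps rather than a single duration, which also lets them compute the critical path, but the tree shape is the same idea.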

2. Why This Model Begins to Break for Agentic AI Systems

Traditional tracing assumes systems behave like orchestrated pipelines.

But agentic AI does not work like a pipeline — it behaves like an adaptive, reasoning-driven graph.

Consider what an AI agent does during a single task:

- decomposes the goal

- generates hypotheses

- invokes tools

- evaluates outcomes

- retries with new strategies

- corrects itself

- branches into parallel reasoning paths

- discards or merges results

This produces non-linear execution whose shape is decided at run time by the agent's own reasoning, which traditional tracing was never designed to represent.

In distributed systems:

Tracing answers what happened and where it happened.

In agentic AI systems:

We also need to know why it happened.

Example contrast:

Distributed span:

POST /inventory — 18ms

Agentic reasoning span:

reasoning.step = "refine hypothesis"

confidence = 0.42

pruned_branches = ["approach_B"]

selected_strategy = "rewrite_query"
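The contrast can be sketched in code. The snippet below puts both spans into the same envelope, assuming a hypothetical `Span` class with a free-form `attributes` dictionary. The attribute keys mirror the example above, while the reasoning span's duration and the `explains_why` helper are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Span:
    """Hypothetical span envelope: a name, a duration, and free-form attributes."""
    name: str
    duration_ms: float
    attributes: Dict[str, Any] = field(default_factory=dict)


# A conventional span: the operation and its timing are the whole story.
http_span = Span(name="POST /inventory", duration_ms=18)

# A reasoning span: same envelope, but the payload is the decision itself.
# The duration is a made-up value; the attribute keys follow the example above.
reasoning_span = Span(
    name="reasoning.step",
    duration_ms=230,
    attributes={
        "reasoning.step": "refine hypothesis",
        "confidence": 0.42,
        "pruned_branches": ["approach_B"],
        "selected_strategy": "rewrite_query",
    },
)


def explains_why(span: Span) -> bool:
    """True if the span records a decision, not only an operation."""
    return "selected_strategy" in span.attributes


for span in (http_span, reasoning_span):
    print(f"{span.name}: records a decision = {explains_why(span)}")
```

Stuffing these fields into generic attributes works mechanically, but nothing in the envelope tells a tracing UI that `pruned_branches` describes paths the agent considered and rejected.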

Out of the box, current tracing tools have:

- no concept of hypotheses

- no representation for branching decisions

- no understanding of reflection loops

- no way to correlate memory or context updates

- no mechanism to show alternative paths the agent considered

Agentic systems produce cognitive workflows, not service workflows.

3. How Observability Questions Will Evolve

Today, observability teams ask:

✔️ Where is the latency coming from?

✔️ Which service is failing?

✔️ What dependencies were involved?

✔️ What was the critical path?

✔️ What retry or timeout behavior occurred?

In an agentic world, the questions become:

🔄 Reasoning-Level Questions

- Why did the agent choose this plan?

- What alternative strategies were considered?

- What caused the agent to retry or abandon a branch?

🧠 Cognitive Workflow Questions

- What did the agent “believe” or “assume” at each step?

- Which memory or context influenced the decision?

- How did the agent refine or reject hypotheses?

⚠️ Safety & Reliability Questions

- Was the reasoning grounded in correct data?

- Which step introduced hallucination risk?

- How can this reasoning path be made reproducible?

⚙️ Tool Interaction Questions

- Did the agent misuse or overuse a tool?

- How did tool results affect downstream reasoning?

- What is the agent’s cost/latency footprint per reasoning path?

These are observability questions we have never had to ask before.
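One of the tool interaction questions above, the cost/latency footprint per reasoning path, is easy to sketch once each tool call is tagged with the path that issued it. The `ToolCall` record, the `path_id` label, and the sample numbers below are assumptions for illustration only; they do not come from any existing tracing format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ToolCall:
    """Hypothetical record of one tool invocation made by an agent."""
    path_id: str        # label for the reasoning branch that issued the call
    tool: str
    latency_ms: float
    cost_usd: float


def footprint_by_path(calls: List[ToolCall]) -> Dict[str, Dict[str, float]]:
    """Sum call count, latency, and cost for each reasoning path."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"calls": 0, "latency_ms": 0.0, "cost_usd": 0.0}
    )
    for call in calls:
        entry = totals[call.path_id]
        entry["calls"] += 1
        entry["latency_ms"] += call.latency_ms
        entry["cost_usd"] += call.cost_usd
    return dict(totals)


# Made-up sample data: two calls on one branch, one call on another.
calls = [
    ToolCall("approach_A", "search", 120.0, 0.002),
    ToolCall("approach_A", "search", 95.0, 0.002),
    ToolCall("approach_B", "sql_query", 40.0, 0.001),
]

for path, totals in footprint_by_path(calls).items():
    print(path, totals)
```

The aggregation itself is trivial. The hard part, and the gap this post describes, is that conventional spans carry no notion of a reasoning path to group by.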

Coming Up Next (Part 2)

In the next post, we’ll dive into what Tracing 2.0 must look like: a new model capable of capturing machine reasoning, not just system behavior.

📚 References & Credits

- Google Dapper (2010)

- OpenTelemetry Specification

- Uber Jaeger Architecture

- W3C Trace Context

- Tree of Thoughts (Yao et al.), Graph of Thoughts (Besta et al.)

- OpenAI Agentic Framework, Anthropic Constitutional AI

Image: https://www.youtube.com/watch?v=XYvQHjWJJTE
